Legal & Step-by-Step ChatGPT Scraping Guide (2026)

Step-by-step guide to using ChatGPT for scraping: prompts, code, parsing, validation, and compliance.

Jan 30, 2026

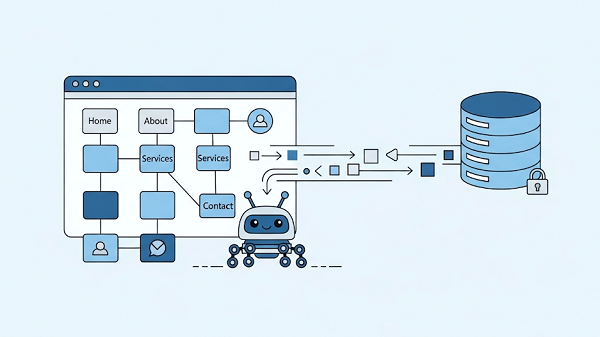

A practical guide to discovering, crawling, extracting, and storing every page of a site safely, with command-line, Python, and headless-browser examples.

Scraping an entire website is a common need, whether for offline archiving, structured data extraction, content migration, or research. With stricter anti-scraping measures and AI-driven defenses, it pays to approach the job thoughtfully. This guide covers practical, ethical, and reproducible ways to do it, from small static sites to JS-heavy and multi-domain scenarios, plus troubleshooting, monitoring, and scale-up guidance.

Web scraping is increasingly combined with AI: language models can summarize, classify, or normalize extracted text, and AI-assisted extractors can help cope with layout changes on complex sites. This guide points out where such tools fit.

Web scraping involves automating the extraction of data from websites, simulating how a human browses but at scale. Scraping an "entire" site could mean:

Mirroring for archiving: Downloading all pages, images, and assets to create a local copy (e.g., preserving a defunct blog).

Crawling for data extraction: Systematically visiting every page to pull structured info like articles, prices, or user reviews (e.g., for market research).

Targeted subsets: Focusing on sections like product catalogs, avoiding irrelevant areas to save time and resources.

Before you start: legal and ethical checklist

Check robots.txt (e.g., https://example.com/robots.txt) and respect its Disallow rules.

Review Terms of Service — some sites prohibit scraping for commercial use.

Avoid personal data unless you have explicit consent. Follow applicable privacy laws.

Be polite: throttle requests, add delays, and include a contact in your User-Agent.

If in doubt, get permission or ask for a data export. For large or commercial projects, consult legal counsel.
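The robots.txt check above can be automated with Python's standard-library `urllib.robotparser`. The sketch below is a minimal example, not a full compliance tool: it reports whether a URL is allowed, any Crawl-delay, and any `Sitemap:` lines. The bot name `site-scraper` is a placeholder, and `site_maps()` needs Python 3.8+.

```python
from urllib import robotparser

USER_AGENT = "site-scraper"  # placeholder bot name; use your own


def load_robots(robots_url):
    """Download and parse robots.txt (network call)."""
    rp = robotparser.RobotFileParser(robots_url)
    rp.read()
    return rp


def parse_robots(text):
    """Parse robots.txt content you already have (handy for offline tests)."""
    rp = robotparser.RobotFileParser()
    rp.parse(text.splitlines())
    return rp


def check(rp, url, user_agent=USER_AGENT):
    """Return (allowed, crawl_delay_seconds, sitemap_urls) for one URL."""
    allowed = rp.can_fetch(user_agent, url)
    delay = rp.crawl_delay(user_agent)  # None when robots.txt sets no Crawl-delay
    sitemaps = rp.site_maps() or []     # site_maps() returns None when there are no Sitemap: lines
    return allowed, delay, sitemaps
```

Typical use: `allowed, delay, maps = check(load_robots("https://example.com/robots.txt"), "https://example.com/blog/")`, then skip disallowed URLs and sleep at least `delay` seconds between requests when it is set.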

Note: Anti-scraping defenses are evolving (behavioral detection, fingerprinting). Prefer API endpoints or owner-provided exports where available.

Quick mirror (Beginner, 5–30 min): small static sites — wget.

Structured scraping (Intermediate, 30–120 min): requests + BeautifulSoup, or a crawler framework for scale.

JS-heavy sites (Advanced, 1–several hours): headless browsers (Playwright/Puppeteer) or capture API endpoints.

No-code / POC: visual scrapers for fast trials (less control).

Multi-site / web-scale: automation pipelines + distributed workers + proxy management.

Discover URLs (sitemap first)

Why: Sitemaps list a site's canonical URLs, so they are the most reliable starting point and help you avoid duplicates.

Process

1. Check robots.txt for Sitemap: lines.

2. Fetch sitemap.xml (may be an index pointing to multiple sitemaps, sometimes gzipped).

3. If no sitemap, start a breadth-first crawl from the homepage and extract internal links.

4. Use lastmod or changefreq (if present) to prioritize pages.
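Step 3 (a breadth-first crawl when there is no sitemap) could look like the sketch below. It uses only the standard library, stays on the start URL's host, drops `#fragments`, and stops at `max_depth` and `max_pages`. `fetch(url)` is a hypothetical callback you supply (for example, a polite wrapper around requests) that returns HTML, or None on failure.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def internal_links(html, base_url):
    """Absolute http(s) links on the same host as base_url, without fragments."""
    parser = LinkExtractor()
    parser.feed(html)
    host = urlparse(base_url).netloc
    out = []
    for href in parser.links:
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            out.append(absolute)
    return out


def bfs_crawl(start_url, fetch, max_pages=100, max_depth=3):
    """Breadth-first crawl of one host; returns URLs in the order they were fetched."""
    seen = {start_url}
    queue = deque([(start_url, 0)])
    order = []
    while queue and len(order) < max_pages:
        url, depth = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # fetch failed; skip it
        order.append(url)
        if depth >= max_depth:
            continue  # don't expand links beyond the depth limit
        for link in internal_links(html, url):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return order
```

The `seen` set plus `max_depth` is what prevents the infinite loops mentioned under troubleshooting; combining it with URL normalization makes it more robust.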

Robust sitemap fetcher (handles gzipped / index sitemaps):

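```python
import gzip
import xml.etree.ElementTree as ET

import requests

HEADERS = {"User-Agent": "site-scraper (you@example.com)"}  # placeholder: put your real contact here


def fetch_sitemap(url):
    """Return all page URLs from a sitemap, following sitemap index files recursively."""
    r = requests.get(url, timeout=15, headers=HEADERS)
    r.raise_for_status()
    content = r.content
    # requests already decodes Content-Encoding: gzip, but a raw .gz file still starts with the gzip magic bytes
    if content[:2] == b"\x1f\x8b":
        content = gzip.decompress(content)
    root = ET.fromstring(content)
    # Find loc elements in any sitemap namespace
    locs = [loc.text.strip() for loc in root.findall(".//{*}loc") if loc.text]
    # A sitemap index lists other sitemaps rather than pages: recurse into each one
    if root.tag.endswith("sitemapindex"):
        return [page for child in locs for page in fetch_sitemap(child)]
    return locs
```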

Edge cases: sitemap.xml.gz, sitemap index files, multiple sitemaps per site.
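Step 4 of the process above (prioritizing by lastmod) needs the lastmod value alongside each loc. A minimal sketch, assuming a plain `<urlset>` sitemap and W3C Datetime values such as `2025-01-30` or `2025-01-30T08:00:00Z`: entries without a parseable lastmod go last, and values without a UTC offset are treated as UTC (an assumption, since sitemaps don't always say).

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone


def sitemap_entries(xml_bytes):
    """Return (loc, lastmod_string_or_None) pairs from a <urlset> sitemap."""
    root = ET.fromstring(xml_bytes)
    entries = []
    for url_el in root.findall("{*}url"):
        loc = url_el.findtext("{*}loc")
        lastmod = url_el.findtext("{*}lastmod")
        if loc:
            entries.append((loc.strip(), lastmod.strip() if lastmod else None))
    return entries


def parse_lastmod(value):
    """Parse '2025-01-30' or '2025-01-30T08:00:00Z'; None if missing or unparseable."""
    if not value:
        return None
    try:
        dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return None
    return dt if dt.tzinfo else dt.replace(tzinfo=timezone.utc)  # assume UTC when no offset is given


def by_recency(entries):
    """Newest lastmod first; entries without a usable lastmod keep their order at the end."""
    oldest = datetime.min.replace(tzinfo=timezone.utc)
    return sorted(entries, key=lambda e: parse_lastmod(e[1]) or oldest, reverse=True)
```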

Quick mirror with wget

When to use: small, non-dynamic sites (e.g., personal blogs) when you want a full local copy.

Concrete command (wget)

```bash
wget --mirror \
     --page-requisites \
     --convert-links \
     --adjust-extension \
     --restrict-file-names=windows \
     --domains example.com \
     --no-parent \
     --wait=5 \
     --random-wait \
     https://example.com
```

Flags explained

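--mirror: shorthand for recursion with unlimited depth plus timestamping (-r -N -l inf --no-remove-listing).

--page-requisites: download the images, CSS, and JS needed to render each page.

--convert-links: rewrite links so the copy can be browsed locally.

--adjust-extension: append .html to HTML pages (and .css to stylesheets) saved without that extension.

--restrict-file-names=windows: keep local file names valid on Windows (for example, @ replaces ? before query strings).

--domains: only follow links to the listed domains.

--no-parent: don't follow links to parent directories.

--wait=5 / --random-wait: pause between requests; --random-wait varies each pause between 0.5 and 1.5 times the --wait value.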

Test and verify: open the downloaded example.com/index.html in a browser, then check wget's output (save it with -o wget.log) for 404s and missing assets.

Limitations: client-side JS content may be missing — use headless browsing if needed.
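To follow up on the verification step, the sketch below walks a wget mirror and lists `src`/`href` references whose local file does not exist. It is a heuristic that assumes links were rewritten by `--convert-links`; query-string URLs (which `--restrict-file-names=windows` renames) may appear as false positives. Pass the host directory (e.g. `./example.com`) so root-relative links resolve against it. `find_missing_assets` is a hypothetical helper name.

```python
import os
from html.parser import HTMLParser
from urllib.parse import unquote, urlparse


class _RefCollector(HTMLParser):
    """Collects every src/href attribute value in a page."""

    def __init__(self):
        super().__init__()
        self.refs = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                self.refs.append(value)


def find_missing_assets(mirror_dir):
    """Return (html_file, reference) pairs whose local target is missing."""
    missing = []
    for dirpath, _dirs, files in os.walk(mirror_dir):
        for name in files:
            if not name.endswith((".html", ".htm")):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as fh:
                collector = _RefCollector()
                collector.feed(fh.read())
            for ref in collector.refs:
                parsed = urlparse(ref)
                if parsed.scheme or parsed.netloc or not parsed.path:
                    continue  # external URL, mailto:/data: URI, or a same-page #fragment
                if parsed.path.startswith("/"):
                    # root-relative link: resolve against the mirror's host directory
                    target = os.path.join(mirror_dir, unquote(parsed.path.lstrip("/")))
                else:
                    target = os.path.join(dirpath, unquote(parsed.path))
                if not os.path.exists(os.path.normpath(target)):
                    missing.append((path, ref))
    return missing
```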

Structured scraping with Python (requests + BeautifulSoup)

When to use: you need structured fields across many pages (titles, prices, dates).

Core pattern: discover URLs → fetch → parse → store → enqueue links.

Prereqs: Python installed; pip install requests beautifulsoup4.

Minimal sitemap-first crawler (sitemap_crawl.py)

```python
# sitemap_crawl.py
import json
import random
import time
from urllib.parse import urljoin, urlparse
from xml.etree import ElementTree as ET

import requests
from bs4 import BeautifulSoup

SITE = "https://example.com"
SITEMAP = urljoin(SITE, "/sitemap.xml")
OUTFILE = "records.jsonl"
HEADERS = {"User-Agent": "site-scraper (you@example.com)"}  # placeholder: put your real contact here


def fetch_sitemap(url):
    """Return every <loc> URL in a plain (non-gzipped) sitemap, or [] on error."""
    try:
        r = requests.get(url, timeout=15, headers=HEADERS)
        r.raise_for_status()
        root = ET.fromstring(r.content)  # bytes let the XML declaration decide the encoding
        return [loc.text.strip() for loc in root.findall(".//{*}loc") if loc.text]
    except Exception as e:
        print(f"Sitemap error: {e}")
        return []


def parse_article(html, url):
    """Extract the title and the first 500 characters of <article> paragraphs."""
    try:
        soup = BeautifulSoup(html, "html.parser")
        title = (soup.select_one("h1") or soup.title).get_text(strip=True)
        body = " ".join(p.get_text(" ", strip=True) for p in soup.select("article p"))
        return {"url": url, "title": title, "content_snippet": body[:500]}
    except Exception as e:  # e.g. the page has neither <h1> nor <title>
        print(f"Parse error: {e}")
        return {"url": url, "error": str(e)}


def main():
    urls = fetch_sitemap(SITEMAP)
    with open(OUTFILE, "w", encoding="utf-8") as fh:
        for u in urls:
            if urlparse(u).netloc != urlparse(SITE).netloc:
                continue  # stay on the target host
            try:
                r = requests.get(u, timeout=15, headers=HEADERS)
                r.raise_for_status()
                record = parse_article(r.text, u)
                fh.write(json.dumps(record, ensure_ascii=False) + "\n")
            except requests.exceptions.RequestException as e:
                print(f"HTTP error for {u}: {e}")
            time.sleep(1.5 + random.random())  # polite random delay of 1.5 to 2.5 s


if __name__ == "__main__":
    main()
```

Tips

Use JSONL (newline-delimited JSON) for streaming ingestion.

Start with 5–10 pages to validate selectors.

Add more rigorous error handling and retries for production.
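To validate selectors on that first small batch, you can stream the JSONL output and count how many records came back empty. A minimal sketch, assuming the field names produced by `parse_article` above (`title`, `content_snippet`, and `error` on failures); `validate_jsonl` is a hypothetical helper name.

```python
import json


def validate_jsonl(path, required=("title", "content_snippet")):
    """Summarize a JSONL file: total records, parse errors, and empty values per field."""
    total = errors = 0
    missing = {field: 0 for field in required}
    with open(path, encoding="utf-8") as fh:
        for line in fh:  # one JSON object per line, so the file is streamed, not loaded whole
            if not line.strip():
                continue
            record = json.loads(line)
            total += 1
            if "error" in record:
                errors += 1
                continue
            for field in required:
                if not record.get(field):
                    missing[field] += 1
    return {"total": total, "errors": errors, "missing": missing}
```

If `missing["content_snippet"]` is close to the total, the `article p` selector probably doesn't match this site's markup.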

AI enhancement (trend): post-process extracted text with a model to summarize or categorize entries, reducing manual labeling.

JS-heavy sites with a headless browser

When to use: modern single-page apps (SPAs) or sites that render content only on the client side.

Prefer API detection first

Open DevTools → Network → filter XHR/Fetch → perform the action that loads data → copy the API call and query it directly.
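Once DevTools reveals the JSON endpoint, you can page through it directly. A minimal standard-library sketch: the endpoint URL, the `page` parameter, and the `items` key in the usage line are hypothetical, since every site names these differently.

```python
import json
import urllib.parse
import urllib.request


def fetch_json(url, params=None, user_agent="site-scraper (you@example.com)"):
    """GET a JSON endpoint found in DevTools (network call). Placeholder contact in the UA."""
    if params:
        url = f"{url}?{urllib.parse.urlencode(params)}"
    req = urllib.request.Request(url, headers={"User-Agent": user_agent, "Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=15) as resp:
        return json.load(resp)


def iter_api_items(fetch_page, first_page=1, max_pages=1000):
    """Yield items page by page until a page comes back empty. fetch_page(n) returns a list."""
    for n in range(first_page, first_page + max_pages):
        items = fetch_page(n)
        if not items:
            return
        yield from items
```

Usage (hypothetical endpoint): `list(iter_api_items(lambda n: fetch_json("https://example.com/api/products", {"page": n})["items"]))`. Add a delay inside `fetch_page` to keep the same politeness as page scraping.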

If no API is available, use a headless browser (Playwright example):

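```python
# playwright_extract.py
import json

from playwright.sync_api import sync_playwright


def extract(url):
    """Return Next.js page data if present, otherwise the rendered HTML."""
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, timeout=30000)
            try:
                # __NEXT_DATA__ is specific to Next.js sites; other frameworks embed data differently
                json_text = page.locator("script#__NEXT_DATA__").text_content(timeout=2000)
                data = json.loads(json_text)
            except Exception:
                data = {"html": page.content()}  # fall back to the rendered DOM
        finally:
            browser.close()  # close the browser even if navigation fails
    return data


if __name__ == "__main__":
    print(extract("https://example.com/product/123"))
```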

Tips

Capture network requests for API endpoints — calling APIs directly is faster and more stable.

For sophisticated anti-bot defenses, vary navigation timing and user-like interactions, but do not impersonate individuals or harvest private data.
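The first tip can be partly automated: Playwright can report every response a page triggers, so you can list the XHR/fetch calls that return JSON and then call them directly. This is a sketch under assumptions: it treats a response as an API call when its resource type is `xhr` or `fetch` and its content type mentions JSON, and it waits for network idle, which some pages never reach (the timeout then applies).

```python
def looks_like_api(resource_type, content_type):
    """Heuristic: XHR/fetch responses with a JSON content type are likely API calls."""
    return resource_type in ("xhr", "fetch") and "json" in (content_type or "").lower()


def capture_api_calls(url, timeout_ms=30000):
    """Load url in headless Chromium and return the URLs of JSON XHR/fetch responses."""
    from playwright.sync_api import sync_playwright  # imported here so looks_like_api has no dependency

    found = []
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()

            def on_response(response):
                content_type = response.headers.get("content-type", "")  # header names are lower-cased
                if looks_like_api(response.request.resource_type, content_type):
                    found.append(response.url)

            page.on("response", on_response)
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
        finally:
            browser.close()
    return found
```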

Handling blocks and rate limits

When to use: you start seeing blocks (429/403) or need geo-specific results.

Warning signs: repeated 429 (Too Many Requests) or 403 responses, or sudden CAPTCHA pages.

1. Throttle: increase delays and reduce concurrency.

2. Exponential backoff (safe retry):

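```python
import random
import time

import requests

RETRYABLE = {429, 500, 502, 503, 504}


def safe_get(url, attempts=5, **kwargs):
    """GET with exponential backoff; returns the last Response, or None if every attempt failed."""
    for attempt in range(attempts):
        try:
            r = requests.get(url, timeout=15, **kwargs)
            if r.status_code not in RETRYABLE:
                return r  # success, or a non-retryable error such as 404 (check r.status_code)
            retry_after = r.headers.get("Retry-After", "")
            delay = int(retry_after) if retry_after.isdigit() else 2 ** attempt
        except requests.RequestException:
            delay = 2 ** attempt
        if attempt < attempts - 1:
            time.sleep(delay + random.random())  # 1s, 2s, 4s... plus jitter
    return None
```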

3. Rotate headers: rotate User-Agent strings and include a contact email in User-Agent where appropriate.

4. Session handling: preserve cookies for session-based sites.
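Items 3 and 4 can be combined in one small client. The sketch below uses only the standard library: a cookie jar keeps session cookies between requests, and each request takes the next User-Agent string from a list you supply. `PoliteSession` is a hypothetical helper name.

```python
import http.cookiejar
import itertools
import urllib.request


class PoliteSession:
    """Keeps cookies across requests and cycles through the User-Agent strings you provide."""

    def __init__(self, user_agents):
        self.jar = http.cookiejar.CookieJar()  # session cookies persist here between requests
        self.opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(self.jar))
        self._agents = itertools.cycle(user_agents)

    def build_request(self, url):
        # urllib stores header names capitalized, e.g. "User-agent"
        return urllib.request.Request(url, headers={"User-Agent": next(self._agents)})

    def get(self, url, timeout=15):
        """Return (status, body bytes); raises urllib.error.HTTPError on 4xx/5xx responses."""
        with self.opener.open(self.build_request(url), timeout=timeout) as resp:
            return resp.status, resp.read()
```

Usage: `PoliteSession(["site-scraper/1.0 (you@example.com)", "site-scraper/1.1 (you@example.com)"]).get("https://example.com/")`. The agent strings are placeholders; keeping a contact address in each one stays consistent with the advice above.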

If polite throttling still results in blocks or you need geo-located pages, consider testing a reputable rotating proxy IP service like GoProxy (datacenter, residential, or mobile, depending on needs).

Start with a small pilot (e.g., 1,000 requests) and measure: success rate, average latency, and block rate.
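To measure the pilot, record each request's status code and latency, then summarize them. A minimal sketch: counting only 403 and 429 as "blocked" is an assumption, since CAPTCHA pages often return 200 and would need a content check to detect. `pilot_report` is a hypothetical helper name.

```python
from statistics import mean


def pilot_report(results):
    """results: (status_code or None, latency_ms) pairs; None means no response (timeout, connection error)."""
    n = len(results)
    if n == 0:
        return {"requests": 0}
    ok = sum(1 for status, _ in results if status == 200)
    blocked = sum(1 for status, _ in results if status in (403, 429))
    latencies = [ms for status, ms in results if status is not None]  # ignore requests that never answered
    return {
        "requests": n,
        "success_rate": ok / n,
        "block_rate": blocked / n,
        "avg_latency_ms": mean(latencies) if latencies else None,
    }
```

Running the same pilot with and without a proxy gives you a like-for-like comparison before committing to one.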

Combine proxies with polite delays, session handling, and robots.txt compliance.

Proxy usage examples

Python requests with a single proxy:

```python
import requests

proxy = "http://user:pass@proxy-host:port"  # placeholder: your provider's host, port, and credentials
proxies = {"http": proxy, "https": proxy}
r = requests.get("https://example.com/page", proxies=proxies, timeout=15,
                 headers={"User-Agent": "site-scraper (you@example.com)"})  # placeholder contact
```

Playwright with proxy:

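```python
from playwright.sync_api import sync_playwright

with sync_playwright() as p:
    # The proxy dict also accepts "username" and "password" keys for authenticated proxies
    browser = p.chromium.launch(proxy={"server": "http://proxy-host:port"}, headless=True)
    page = browser.new_page()
    page.goto("https://example.com")
    # ... extract data here ...
    browser.close()
```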

Avoid programmatic CAPTCHA solving unless you have a lawful, justified business process. Prefer alternatives: slow down, request API access, or contact the site owner.

For login-required content use valid credentials and maintain session cookies securely. Check the site’s ToS about automated access behind login.

Design a schema before scraping to prevent rework.

Example product table (PostgreSQL)

```sql
CREATE TABLE products (
    id SERIAL PRIMARY KEY,
    url TEXT UNIQUE,
    title TEXT,
    sku TEXT,
    price NUMERIC,
    currency VARCHAR(8),
    scraped_at TIMESTAMP DEFAULT now(),
    raw_json JSONB
);
```

Tips

Store raw HTML/JSON for re-processing.

Write in chunks to avoid memory spikes.

Normalize dates, currencies, and remove HTML artifacts.

Use a search index (OpenSearch/Elasticsearch) for fast retrieval.

Tip: AI-based normalization (entity recognition, currency conversion) can reduce manual cleaning work.
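As a lightweight stand-in for the PostgreSQL table above, the sketch below uses SQLite from the standard library to write records in chunks, upsert on the unique `url`, keep the raw record as JSON, and normalize prices. It assumes records shaped like `{"url", "title", "price", "currency"}` and a `.` decimal separator; `store_chunked` and `normalize_price` are hypothetical names, and the `ON CONFLICT` upsert needs SQLite 3.24+.

```python
import json
import re
import sqlite3
from decimal import Decimal, InvalidOperation

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    id INTEGER PRIMARY KEY,
    url TEXT UNIQUE,
    title TEXT,
    price TEXT,
    currency TEXT,
    scraped_at TEXT DEFAULT CURRENT_TIMESTAMP,
    raw_json TEXT
)"""

UPSERT = """
INSERT INTO products (url, title, price, currency, raw_json) VALUES (?, ?, ?, ?, ?)
ON CONFLICT(url) DO UPDATE SET title = excluded.title, price = excluded.price,
    currency = excluded.currency, raw_json = excluded.raw_json"""


def normalize_price(text):
    """'$1,299.00' -> Decimal('1299.00'); None if nothing numeric remains. Assumes '.' decimals."""
    digits = re.sub(r"[^\d.]", "", text or "")
    try:
        return Decimal(digits) if digits else None
    except InvalidOperation:
        return None


def store_chunked(conn, records, chunk_size=500):
    """Upsert records in batches so memory stays flat on large crawls."""
    batch = []
    for rec in records:
        price = normalize_price(rec.get("price"))
        batch.append((rec["url"], rec.get("title"), str(price) if price is not None else None,
                      rec.get("currency"), json.dumps(rec, ensure_ascii=False)))
        if len(batch) >= chunk_size:
            conn.executemany(UPSERT, batch)
            conn.commit()
            batch.clear()
    if batch:  # flush the final partial batch
        conn.executemany(UPSERT, batch)
        conn.commit()
```

Prices are stored as text so the exact `Decimal` value survives; SQLite has no fixed-point numeric type.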

Large crawls get interrupted — design for resume.

Persist visited set and queue every N pages (SQLite, Redis, or JSON file). On shutdown, save state; on restart, load and resume.

Simple checkpoint example (JSON)

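```python
import json
import os


def save_state(queue, visited, path="checkpoint.json"):
    """Write the queue and visited set; the old checkpoint is replaced only after a full write."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as fh:
        json.dump({"queue": list(queue), "visited": list(visited)}, fh)
    os.replace(tmp, path)  # single-step swap (atomic on POSIX), so a crash never leaves a half-written file


def load_state(path="checkpoint.json"):
    """Return (queue, visited), or (None, None) if no checkpoint exists yet."""
    try:
        with open(path, "r", encoding="utf-8") as fh:
            s = json.load(fh)
        return s["queue"], set(s["visited"])
    except FileNotFoundError:
        return None, None
```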
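A JSON checkpoint only captures state when you save it, so a crash loses everything since the last save. The SQLite option mentioned above can persist the frontier on every change. A minimal sketch (`Frontier` is a hypothetical name): each URL is stored once with a `done` flag, so restarting the program resumes from the first unfinished URL in insertion (breadth-first) order.

```python
import sqlite3


class Frontier:
    """Crawl frontier persisted in SQLite; a crash loses at most the URL being fetched."""

    def __init__(self, path="frontier.db"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS urls (url TEXT PRIMARY KEY, depth INTEGER, done INTEGER DEFAULT 0)"
        )
        self.conn.commit()

    def add(self, url, depth=0):
        # INSERT OR IGNORE: each URL is queued at most once, even across restarts
        self.conn.execute("INSERT OR IGNORE INTO urls (url, depth) VALUES (?, ?)", (url, depth))
        self.conn.commit()

    def next(self):
        """Return (url, depth) of the oldest unfinished URL, or None when the crawl is done."""
        return self.conn.execute(
            "SELECT url, depth FROM urls WHERE done = 0 ORDER BY rowid LIMIT 1"
        ).fetchone()

    def mark_done(self, url):
        self.conn.execute("UPDATE urls SET done = 1 WHERE url = ?", (url,))
        self.conn.commit()
```

Committing on every call keeps the sketch simple but is slow on big crawls; committing every N pages is a reasonable compromise.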

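URL normalization (avoids duplicate pages and crawl loops)

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def normalize(url):
    """Lowercase the host, sort query params, drop tracking params, trailing slash, and fragment."""
    p = urlparse(url)
    q = [(k, v) for k, v in sorted(parse_qsl(p.query, keep_blank_values=True))
         if not k.startswith(("utm_", "fbclid"))]
    path = p.path.rstrip("/")  # treat /page and /page/ as the same URL
    return urlunparse((p.scheme, p.netloc.lower(), path, "", urlencode(q), ""))
```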

Use canonical (<link rel="canonical">) where available.
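Reading the canonical link needs only the standard library. In this sketch, `canonical_url` (a hypothetical helper) returns the page's canonical URL resolved against the page URL, or the page URL itself when none is declared. It does not check that the canonical points to the same site, which is worth verifying before trusting it.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical">."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag == "link" and self.href is None:
            a = dict(attrs)
            rels = (a.get("rel") or "").lower().split()  # rel may hold several tokens
            if "canonical" in rels and a.get("href"):
                self.href = a["href"]


def canonical_url(html, page_url):
    finder = _CanonicalFinder()
    finder.feed(html)
    return urljoin(page_url, finder.href) if finder.href else page_url
```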

Metrics to monitor

pages_processed_total

pages_per_minute (sliding window)

status_counts (200 / 404 / 429 / 5xx)

retries_total

avg_latency_ms

Set alerts when 429/5xx spikes. If blocks increase, slow the crawl and test proxies.
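The metrics listed above can be tracked in-process with a small class like the one below. It is a sketch; a production crawler would more likely export them to a monitoring system. `CrawlMetrics` is a hypothetical name, and the clock is injectable so the sliding window can be tested.

```python
import time
from collections import Counter, deque


class CrawlMetrics:
    """pages_processed_total, pages_per_minute, status_counts, retries_total, avg_latency_ms."""

    def __init__(self, window_s=60, clock=time.monotonic):
        self.clock = clock
        self.window_s = window_s
        self.pages_processed_total = 0
        self.retries_total = 0
        self.status_counts = Counter()
        self._latency_sum_ms = 0.0
        self._recent = deque()  # finish times of pages inside the sliding window

    def record(self, status, latency_ms, retries=0):
        self.pages_processed_total += 1
        self.retries_total += retries
        self.status_counts["5xx" if 500 <= status < 600 else status] += 1  # bucket all 5xx together
        self._latency_sum_ms += latency_ms
        self._recent.append(self.clock())

    def pages_per_minute(self):
        now = self.clock()
        while self._recent and now - self._recent[0] > self.window_s:
            self._recent.popleft()  # drop pages that finished before the window
        return len(self._recent) * 60.0 / self.window_s

    def avg_latency_ms(self):
        n = self.pages_processed_total
        return self._latency_sum_ms / n if n else 0.0

    def block_rate(self):
        """Share of pages answered with 429 or 5xx, the signals worth alerting on."""
        n = self.pages_processed_total
        return (self.status_counts[429] + self.status_counts["5xx"]) / n if n else 0.0
```

A simple alert could be `if metrics.block_rate() > 0.05:` followed by increasing delays; the 5% threshold is an illustrative assumption, not a standard.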

Troubleshooting

Lots of 429 → increase delays, reduce concurrency, and consider a proxy pilot.

Missing content after mirror → site is JS-driven; use Playwright or find API endpoints.

Infinite crawl loops → enforce max_depth and normalize/strip tracking params.

CAPTCHAs → back off and try API or contact owner.

For thousands to millions of pages, move to an automation pipeline with distributed workers and proxy management.

Alternatively, use public archives such as Common Crawl for research-scale needs.

Q: Will scraping break the site?

A: If you respect robots.txt, add delays, and keep concurrency low, you’re unlikely to harm a site. On small hosts, contact the owner first.

Q: Can I scrape paywalled content?

A: Don’t bypass paywalls. Obtain permission or use provided APIs.

Q: How do I resume a crawl?

A: Persist queue and visited to disk periodically and reload them on startup.

Q: How do I test safely?

A: Use your own site or public sandboxes designed for testing scraping.

Scraping a whole site is practical and safe when planned: start with sitemaps, prefer APIs, extract structured JSON when possible, and escalate tools only when needed. Build checkpointing and observability from day one. For high-volume or sensitive projects, involve legal and infrastructure experts early.
