How to Scrape a Whole Site: Step-by-Step Guide for 2026

Practical guide to discover, crawl, extract and store all pages safely — includes command-line, Python and headless browser examples.

Jan 12, 2026

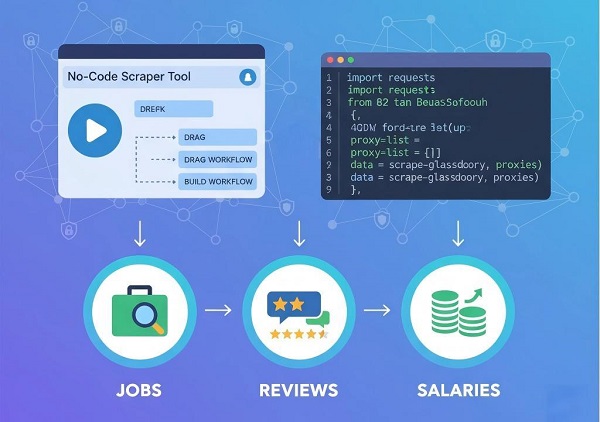

Master scraping Glassdoor for reviews, jobs, and salaries using no-code tools, Python scripts, and proxies to bypass 2025 anti-bot measures.

Glassdoor remains one of the key employment data sources in 2025, packed with company reviews, salary insights, job listings, and employee feedback.

However, scraping Glassdoor isn’t without challenges. With AI-driven detection, CAPTCHAs, IP rate limiting, and Cloudflare protections, naive scraping attempts often result in quick failures. In this guide, we’ll cover practical methods, from simple no-code tools to advanced Python scripts, and provide insights into overcoming Glassdoor’s anti-scraping barriers. We’ll also address concerns like legality, proxy setup, and handling dynamic JavaScript content, giving you the tools to scrape ethically and efficiently in 2025.

The need for scraping Glassdoor varies across different user groups. Common cases include:

Job Seekers and Career Changers: Pull salary ranges and reviews to inform negotiation or evaluate potential employers.

Recruiters and HR Professionals: Collect employee satisfaction scores, interview questions, and competitive job listings for refined hiring strategies.

Market Analysts and Researchers: Aggregate datasets for trend analysis, such as remote work preferences or industry turnover rates.

Developers and Data Scientists: Feed data pipelines for machine learning models predicting job market shifts.

However, users often encounter several challenges:

Legality: Is it allowed to scrape Glassdoor?

IP Blocks and CAPTCHAs: How to avoid getting blocked?

Coding Complexity: What if you're a beginner without coding experience?

Ethical Use: How to ensure that the data is used responsibly?

We will address these concerns progressively.

Scraping public Glassdoor data for personal or research use is generally legal, provided you follow Glassdoor’s terms of service, respect the robots.txt file, and adhere to privacy laws. Key ethical guidelines include:
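As one concrete guideline, the robots.txt check can be automated. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the `my-research-bot` user agent, and the example.com URLs are made-up placeholders so the example runs offline; they are not Glassdoor's actual rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only so this sketch runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer can_fetch()."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Placeholder user agent and URLs; substitute your own.
allowed = parser.can_fetch("my-research-bot", "https://example.com/jobs/page1.htm")
blocked = parser.can_fetch("my-research-bot", "https://example.com/private/data.htm")
delay = parser.crawl_delay("my-research-bot")  # Seconds to wait, or None if unset
```

Against a live site you would instead call `set_url()` with the site's robots.txt address followed by `read()`, then skip any URL for which `can_fetch()` returns `False`.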

Glassdoor’s defenses have evolved in 2025, introducing sophisticated anti-bot technologies:

Login Overlays: Pop-ups demanding logins after a few pages.

IP Blocks/CAPTCHAs: Cloudflare and AI-driven pattern detection that flag scraping attempts.

Dynamic Content: Much of Glassdoor’s data is rendered via JavaScript, making it harder to scrape without specialized tools.

Pagination and Region-Based Content: Infinite scrolling and country-specific content may require advanced techniques.

Proxies are key to solving these challenges. GoProxy offers rotating IPs, which make your scraper appear as multiple users, reducing blocks and bypassing geo-restrictions. Use residential proxies when stealth matters most; datacenter proxies are a faster, cheaper option when speed matters more.

Based on your goals, here’s a breakdown of the methods you can use to scrape Glassdoor:

| Method | Difficulty | Scale (Pages) | Tools Needed | Time to Set Up | Best For |
| --- | --- | --- | --- | --- | --- |
| No-Code | Easy | <100 | Octoparse (or similar) | 15–30 mins | One-off jobs, beginners |
| Basic Python | Medium | 100–500 | Selenium/Playwright, BeautifulSoup, GoProxy | 30–60 mins | Recruiters, intermediates |
| Advanced APIs | Hard | 500+ | httpx, parsel, GoProxy, LLM integration | 1–2 hours | Analysts, large datasets |

Start based on your needs and add GoProxy to handle any scale.

If coding intimidates you, no-code tools offer a quick way to get started. These platforms auto-detect fields and handle basic anti-scraping measures.

1. Choose a Tool: Opt for user-friendly platforms like Octoparse (with a free tier available).

2. Install and Set Up: Create a new task in the tool.

3. Enter the URL: Open Glassdoor’s jobs or reviews page (e.g., Glassdoor Reviews) and filter by company or keyword.

4. Auto-Detect Data: Let the tool scan for data elements like company names, ratings, and reviews. You can customize the fields to extract specific data like review text, pros/cons, and dates.

5. Handle Pagination: Configure the tool to click “Next” or scroll infinitely. Set a page limit to avoid overloading Glassdoor’s servers.

6. Bypass Basic Blocks: Integrate GoProxy by entering your API key in the proxy settings. Choose residential proxies for better success rates.

7. Run and Export: Execute the task locally or in the cloud and export to CSV/Excel. Test on 5–10 pages first.

Tips for Success

If you are scraping fewer than 100 pages, you can often get by without proxies. For larger projects, expect a 20-30% block rate without them; GoProxy can drop this to under 5%. This method suits one-off projects, such as a job seeker collecting reviews.

For more control, scripting provides flexibility and scalability. We’ll use Selenium or Playwright for rendering JavaScript and BeautifulSoup for parsing the HTML content.

Before starting, set up access to rotating residential proxies, which are essential for staying anonymous while scraping.

1. Create an account with GoProxy.

2. Configure proxy settings in your script to rotate IP addresses automatically.

3. Choose your proxy rotation frequency (e.g., rotate per request or after a set number of requests).
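The rotation-frequency choice in step 3 can be sketched as a small helper. This is an illustration only: `ProxyRotator` is a hypothetical class, not part of GoProxy's tooling, and the proxy URLs are placeholders. A provider-side rotating endpoint may make client-side rotation unnecessary.

```python
from itertools import cycle


class ProxyRotator:
    """Hand out proxies round-robin, switching after `rotate_every` requests."""

    def __init__(self, proxies, rotate_every=1):
        if not proxies:
            raise ValueError("at least one proxy is required")
        if rotate_every < 1:
            raise ValueError("rotate_every must be >= 1")
        self._pool = cycle(proxies)
        self._rotate_every = rotate_every
        self._used = 0  # Requests served by the current proxy
        self._current = next(self._pool)

    def get(self):
        """Return the proxy to use for the next request."""
        if self._used >= self._rotate_every:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current


# Placeholder proxy URLs; replace with the endpoints from your dashboard.
rotator = ProxyRotator(
    ["http://proxy-a.example:8000", "http://proxy-b.example:8000"],
    rotate_every=2,
)
sequence = [rotator.get() for _ in range(5)]
```

With `rotate_every=1` the helper rotates on every request; larger values keep a session on one IP for several requests, which can look more like a single human visitor.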

    import random
    import time

    import pandas as pd
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.common.exceptions import WebDriverException

    # GoProxy setup
    # Note: Chrome typically ignores user:pass embedded in --proxy-server; if
    # authentication fails, use an IP-allowlisted endpoint instead.
    proxy = "http://your-user:your-pass@host:port"  # From dashboard; rotate by refetching

    options = webdriver.ChromeOptions()
    options.add_argument(f'--proxy-server={proxy}')
    options.add_argument('--headless')  # Hide browser
    driver = webdriver.Chrome(options=options)


    def text_or(node, default):
        """Return the stripped text of a parsed element, or a default if it is missing."""
        return node.text.strip() if node else default


    def parse_jobs(html):
        """Extract one dict per job card from a results page."""
        soup = BeautifulSoup(html, 'html.parser')
        rows = []
        for job in soup.find_all('li', class_='job-listing'):
            rows.append({
                'Title': text_or(job.find('a'), 'N/A'),
                'Company': text_or(job.find('span', class_='companyName'), 'N/A'),
                'Location': text_or(job.find('span', class_='location'), 'N/A'),
                'Salary': text_or(job.find('span', class_='salary'), 'Not listed'),
            })
        return rows


    def hide_overlay():
        """Hide the login overlay if it is present (no error if it is absent)."""
        driver.execute_script(
            "const el = document.querySelector('#HardsellOverlay');"
            "if (el) { el.style.display = 'none'; }"
        )


    data = []
    try:
        driver.get("https://www.glassdoor.com/Job/data-analyst-jobs_SRCH_KO0,12.htm")
        hide_overlay()
        time.sleep(2)
        data.extend(parse_jobs(driver.page_source))  # Extract page 1

        # Paginate (example loop for 5 pages)
        for page in range(2, 6):
            try:
                next_btn = driver.find_element("xpath", '//button[@data-test="pagination-next"]')
                next_btn.click()
            except WebDriverException:
                break  # No "next" button, or the click failed
            time.sleep(3 + random.uniform(1, 2))  # Random delay
            hide_overlay()
            data.extend(parse_jobs(driver.page_source))  # Re-parse and append
    finally:
        driver.quit()

    # Save & clean
    df = pd.DataFrame(data, columns=['Title', 'Company', 'Location', 'Salary'])
    df['Salary'] = df['Salary'].replace('Not listed', pd.NA)  # Example cleaning
    df.to_csv('glassdoor_jobs.csv', index=False)
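The cleaning step in the script above only swaps the "Not listed" placeholder for a missing value. If you also want numeric ranges, a helper along these lines may work. It is a sketch that assumes salary text shaped like `$70K - $95K` or `$70,000 - $95,000`; that format and the `parse_salary_range` name are assumptions, so check them against the strings you actually collect.

```python
import re

# A number with optional thousands separators and decimals, followed
# immediately by an optional K/M suffix (e.g. "70K", "70,000", "1.2M").
_AMOUNT = re.compile(r"(\d[\d,]*(?:\.\d+)?)([KkMm]?)\b")
_MULTIPLIERS = {"k": 1_000, "m": 1_000_000}


def parse_salary_range(text):
    """Return (low, high) as floats, or (None, None) if no amount is found."""
    amounts = []
    for value, suffix in _AMOUNT.findall(text or ""):
        number = float(value.replace(",", ""))
        amounts.append(number * _MULTIPLIERS.get(suffix.lower(), 1))
    if not amounts:
        return (None, None)
    return (min(amounts), max(amounts))


salary_range = parse_salary_range("$70K - $95K")
missing = parse_salary_range("Not listed")
```

Applied to the DataFrame column, the two values can be split into separate minimum and maximum columns for analysis.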

    import asyncio

    from playwright.async_api import async_playwright


    async def main():
        async with async_playwright() as p:
            browser = await p.chromium.launch(
                headless=True,
                # GoProxy: Playwright takes proxy credentials as separate fields
                proxy={'server': 'http://host:port', 'username': 'user', 'password': 'pass'},
            )
            try:
                page = await browser.new_page()
                await page.goto("https://www.glassdoor.com/Job/data-analyst-jobs_SRCH_KO0,12.htm", timeout=60000)

                # Hide overlay (JS eval); does nothing if the overlay is absent
                await page.evaluate(
                    "() => { const el = document.querySelector('#HardsellOverlay');"
                    " if (el) { el.style.display = 'none'; } }"
                )
                await asyncio.sleep(2)

                # Extract similar to above: pass this HTML to BeautifulSoup
                html = await page.content()
            finally:
                await browser.close()
        return html


    asyncio.run(main())

Blocked IPs: Clear cookies and reattempt with new proxies.

CAPTCHAs: Use a CAPTCHA-solving service if IP rotation alone isn’t enough.
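The "reattempt with new proxies" advice can be sketched as a retry loop with exponential backoff. This is a minimal illustration under stated assumptions: `fetch_with_retries` and the `fetch(url, proxy)` callable are hypothetical names, and treating HTTP 403 and 429 as "blocked" is a common convention rather than documented Glassdoor behavior.

```python
import random
import time

# Status codes treated as "blocked" in this sketch (an assumption).
BLOCK_STATUSES = {403, 429}


def fetch_with_retries(fetch, url, proxies, max_attempts=4,
                       base_delay=1.0, sleep=time.sleep):
    """Call fetch(url, proxy) -> (status, body), switching proxy after a block.

    Waits base_delay * 2**attempt seconds (plus jitter) between attempts and
    raises RuntimeError if every attempt is blocked.
    """
    last_status = None
    for attempt in range(max_attempts):
        proxy = proxies[attempt % len(proxies)]  # Next proxy on each attempt
        status, body = fetch(url, proxy)
        if status not in BLOCK_STATUSES:
            return status, body
        last_status = status
        if attempt < max_attempts - 1:
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.5))
    raise RuntimeError(
        f"still blocked after {max_attempts} attempts (last status {last_status})"
    )
```

In practice `fetch` would wrap your HTTP client or browser session, creating a fresh session per proxy so that cookies from a blocked attempt are not reused.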

For large-scale scraping, use GraphQL to target hidden Glassdoor APIs. This method is best suited for analysts who need to collect thousands of records.

1. Prerequisites: pip install httpx parsel.

2. Find IDs: Via typeahead API.

    import httpx
    import json

    response = httpx.get("https://www.glassdoor.com/findPopularCompanies.htm?term=apple")
    response.raise_for_status()  # Fail early on a block page or error status
    data = json.loads(response.text)
    company_id = data['results'][0]['employerId']

3. Extract State (Fallback for 2025 changes):

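    import json
    import re

    def extract_state(html):
        """Return the embedded Apollo/Next.js state as a dict, or {} if not found."""
        # The non-greedy match stops at the first ';', so a payload with ';'
        # inside a string value will be truncated and fail to parse.
        match = re.search(r'window\.__APOLLO_STATE__ = (.*?);|__NEXT_DATA__ = (.*?);', html)
        if not match:
            return {}
        return json.loads(match.group(1) or match.group(2))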

4. Scrape Reviews:

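    response = httpx.get(
        f"https://www.glassdoor.com/Reviews/Company-Reviews-{company_id}.htm",
        proxy="go-proxy-url",  # httpx 0.26+; older versions take proxies= instead
    )
    state = extract_state(response.text)
    # Filter: keep only cache entries that are dicts carrying review text
    reviews = [r for r in state.values() if isinstance(r, dict) and 'reviewText' in r]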

5. Paginate/Regions: Append ?p=2; set cookies {"tldp": "1"} for US.

6. GoProxy Integration: httpx.Client(proxy="http://user:pass@host:port") on httpx 0.26+ (older versions use proxies=); use the GoProxy API for rotation.

7. Unpack Refs: Recursive function for GraphQL cache.
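The recursive unpacking in step 7 might look like the sketch below. It assumes the extracted state follows the Apollo Client normalized-cache convention, where a reference is a dict of the form `{"__ref": "Type:id"}` pointing at another top-level cache key. Whether Glassdoor's current pages use this shape is an assumption to verify in developer tools, and the sample cache is invented.

```python
def unpack_refs(node, cache, _seen=frozenset()):
    """Recursively replace {"__ref": key} nodes with the cache entry they name.

    Dangling or circular references are left in place to avoid infinite
    recursion.
    """
    if isinstance(node, dict):
        ref = node.get("__ref")
        if isinstance(ref, str) and len(node) == 1:
            if ref in _seen or ref not in cache:
                return node  # Cycle or missing target: keep the reference
            return unpack_refs(cache[ref], cache, _seen | {ref})
        return {key: unpack_refs(value, cache, _seen) for key, value in node.items()}
    if isinstance(node, list):
        return [unpack_refs(item, cache, _seen) for item in node]
    return node  # Scalars are returned unchanged


# Invented sample cache illustrating the assumed shape.
sample_cache = {
    "Employer:1": {"name": "Acme"},
    "Review:9": {"reviewText": "Good team", "employer": {"__ref": "Employer:1"}},
}
review = unpack_refs(sample_cache["Review:9"], sample_cache)
```

Running this over each entry in `reviews` yields self-contained records that are easier to load into a DataFrame.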

Monitor JSON shifts via developer tools.

Rotate user-agents to avoid detection.

Use async functions for large-scale data extraction.

Use an LLM (e.g., Gemini) for summaries: Feed it reviews for sentiment analysis.
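For the async tip, bounding concurrency matters as much as using async at all, since unbounded parallel requests are an easy way to trigger rate limits. The sketch below caps in-flight requests with `asyncio.Semaphore`. `fetch_all` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real HTTP call so the example runs offline.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency=5):
    """Run fetch(url) for every URL with at most max_concurrency in flight.

    Results come back in the same order as `urls`.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:  # Wait here once the cap is reached
            return await fetch(url)

    return await asyncio.gather(*(bounded(url) for url in urls))


async def fake_fetch(url):
    """Stand-in for a real request (for example, via an async HTTP client)."""
    await asyncio.sleep(0.01)
    return f"fetched {url}"


def demo():
    urls = [f"https://example.com/page/{n}" for n in range(1, 4)]
    return asyncio.run(fetch_all(urls, fake_fetch, max_concurrency=2))
```

Start with a low cap and raise it only while the block rate stays acceptable.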

Scraping Glassdoor effectively in 2025 requires a mix of the right tools, strategies, and ethical considerations. Beginners can start with no-code tools for simple tasks, while professionals can take advantage of advanced techniques like GraphQL scraping with rotating proxies. Stay ethical—data empowers, but respect sources.

Ready to get started? Choose your method, set up proxies, and start scraping today!
