
Feb 24, 2026

Learn how to combine the Vercel AI SDK with proxies to build scalable, real-time AI apps, with step-by-step code covering streaming, structured outputs, agent workflows, scaling, and troubleshooting.

A clear mental model of where proxies belong in AI workflows (e.g., scraping/MCP vs. LLM API calls).

Beginner → Intermediate → Advanced, copy-ready examples using Vercel AI SDK (version 5+) and GoProxy (env vars, code, streaming, structured outputs).

Operational tips: session stickiness, proxy rotation, monitoring, cost/ROI, and legal cautions.

Troubleshooting recipes for common failure modes (timeouts, CAPTCHA, invalid JSON, stream slowdowns).

The Vercel AI SDK is a TypeScript toolkit for building AI-powered apps, offering a unified API to interact with LLM providers like OpenAI, Anthropic, or Google Gemini. It simplifies tasks like streaming responses, generating structured data with Zod schemas, and creating agentic workflows that call external tools.

Proxies are essential when scaling web-based AI applications. Services like GoProxy provide rotating residential & mobile IPs to fetch web data reliably and anonymously, which is crucial for bypassing geo-restrictions, CAPTCHAs, or blocks.

There are two main roles for proxies in these stacks:

Path 1: Proxying web access. Your AI agent or tool fetches live web data (e.g., product pages, news, SERPs) through proxies. This is the high-value case we'll focus on, as it enriches AI outputs with real-time data.

Path 2: Proxying LLM provider calls. Routing provider traffic through proxies adds latency and complexity; use it only for special enterprise needs like compliance or network restrictions.

This guide emphasizes proxying web access to feed your SDK tools, helping you build data-enriched AI apps without getting blocked.

If your use case involves fetching public data at volume → Use Path 1 with rotating proxies.

If it requires sessions/logins → Use Path 1 with sticky proxies.

If proxying LLM calls is mandatory (e.g., for firewall traversal) → Use Path 2, but test latency first.

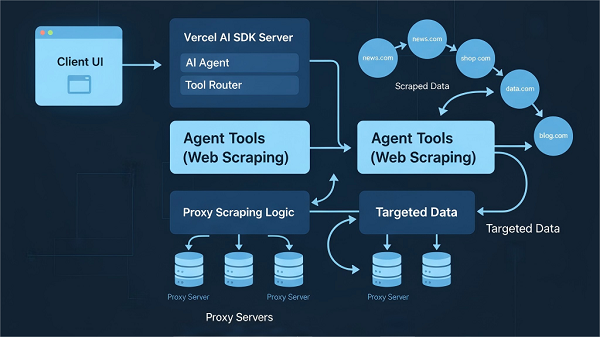

Path 1 (web access): Client UI ←→ Vercel AI SDK (server) ←→ Agent tool (web_scrape) → GoProxy → Target site.

Path 2 (provider calls): Client UI ←→ Vercel AI SDK → Provider API (optionally via proxy).

Proxies sit between your scraping tools and target sites. The SDK then processes scraped results (e.g., HTML/text/JSON) to generate structured outputs or streaming chat.

Node.js (v18+), pnpm/npm/yarn.

Vercel AI SDK: pnpm i ai@5 (we'll use version 5+ for the latest features; see migration guide if upgrading).

Zod for schemas: pnpm i zod.

A GoProxy account (sign up for a 7-day free trial; get credentials like username/password). GoProxy offers 90M+ real IPs from premium ISPs (e.g., AT&T) with 99.99% uptime and flexible pricing.

Provider credentials for your LLM (e.g., OpenAI key).

Basic knowledge of TypeScript/Node and server API routes.

streamText / streamObject: Progressive streaming for faster UX.

generateObject: Requests typed JSON using Zod schemas.

Tool calling: Agents call external functions (e.g., web scrape) for data.

Sticky vs. Rotating proxies: Sticky maintains the same IP (for sessions/logins); rotating changes per request (for large-scale scraping).
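The sticky/rotating distinction can be sketched in a few lines. The `ProxySelector` class and the endpoint URLs below are hypothetical and only illustrate the idea; real providers, GoProxy included, usually implement stickiness on their side through a session parameter, so treat this as a mental model rather than their API.

```typescript
// Illustrative only: endpoint URLs are placeholders, not real GoProxy gateways.
class ProxySelector {
  private readonly endpoints: string[];
  private next = 0;
  private readonly stickyBySession = new Map<string, string>();

  constructor(endpoints: string[]) {
    if (endpoints.length === 0) {
      throw new Error('At least one proxy endpoint is required');
    }
    this.endpoints = endpoints;
  }

  // Rotating: every call advances to the next endpoint (round-robin).
  rotating(): string {
    const endpoint = this.endpoints[this.next % this.endpoints.length];
    this.next += 1;
    return endpoint;
  }

  // Sticky: the first call for a session picks an endpoint; later calls reuse it.
  stickyFor(sessionId: string): string {
    let endpoint = this.stickyBySession.get(sessionId);
    if (endpoint === undefined) {
      endpoint = this.rotating();
      this.stickyBySession.set(sessionId, endpoint);
    }
    return endpoint;
  }
}

const selector = new ProxySelector([
  'http://proxy-a.example:8000',
  'http://proxy-b.example:8000',
]);
const firstHop = selector.rotating(); // proxy-a
const secondHop = selector.rotating(); // proxy-b
const loginHop = selector.stickyFor('checkout-session'); // stays fixed for this session
```

A logged-in flow would call `stickyFor` with the same session ID on every request, while bulk fetches of public pages would call `rotating`.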

Goal: Call the Vercel AI SDK to generate a response that includes live web facts fetched via GoProxy. The next section builds on this by adding structured outputs.

Create a .env file in your project root:

env

# LLM provider (e.g., OpenAI)

OPENAI_API_KEY=your_openai_key

# GoProxy credentials (placeholders; get from your dashboard)

GOPROXY_HOST=proxy.goproxy.com

GOPROXY_PORT=8000

GOPROXY_USER=your_goproxy_user

GOPROXY_PASS=your_goproxy_pass

Note: Store secrets securely (e.g., as encrypted environment variables in your Vercel project settings in production).
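Failing fast on a missing or malformed variable is usually cheaper than debugging a proxy timeout later. The following is a minimal sketch, assuming the four `GOPROXY_*` variables above; `readProxyConfig` and `toProxyUrl` are hypothetical helper names, not part of any SDK.

```typescript
interface ProxyConfig {
  host: string;
  port: number;
  user: string;
  pass: string;
}

// Validate the GOPROXY_* variables once at startup instead of at request time.
function readProxyConfig(env: Record<string, string | undefined>): ProxyConfig {
  const required = ['GOPROXY_HOST', 'GOPROXY_PORT', 'GOPROXY_USER', 'GOPROXY_PASS'];
  const missing = required.filter(name => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  const port = Number(env['GOPROXY_PORT']);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error('GOPROXY_PORT must be an integer between 1 and 65535');
  }
  return {
    host: env['GOPROXY_HOST'] as string,
    port,
    user: env['GOPROXY_USER'] as string,
    pass: env['GOPROXY_PASS'] as string,
  };
}

// URL-encode credentials so characters like "@" or ":" cannot break the proxy URL.
function toProxyUrl(config: ProxyConfig): string {
  const user = encodeURIComponent(config.user);
  const pass = encodeURIComponent(config.pass);
  return `http://${user}:${pass}@${config.host}:${config.port}`;
}
```

In a Node server you would call `readProxyConfig(process.env)` once at startup and pass the result of `toProxyUrl` to your proxy agent.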

pnpm i ai@5 zod axios https-proxy-agent @ai-sdk/openai dotenv

Create server/scrapeAndAsk.ts:

typescript

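import axios from 'axios';
import { HttpsProxyAgent } from 'https-proxy-agent';
import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import dotenv from 'dotenv';

dotenv.config();

// Build an agent that tunnels HTTPS requests through the GoProxy endpoint.
// Exported so later examples can reuse it.
export function createProxyAgent() {
  // URL-encode credentials so characters like "@" or ":" don't break the proxy URL.
  const user = encodeURIComponent(process.env.GOPROXY_USER ?? '');
  const pass = encodeURIComponent(process.env.GOPROXY_PASS ?? '');
  const proxyUrl = `http://${user}:${pass}@${process.env.GOPROXY_HOST}:${process.env.GOPROXY_PORT}`;
  return new HttpsProxyAgent(proxyUrl);
}

export async function scrapeThenAsk(url: string, promptExtra = '') {
  const agent = createProxyAgent();
  try {
    const resp = await axios.get<string>(url, {
      httpsAgent: agent, // applies to https:// targets
      proxy: false, // let the agent do the proxying, not axios's own proxy handling
      responseType: 'text', // keep the body as a string even if the server sends JSON
      timeout: 20000,
      headers: { 'User-Agent': 'Mozilla/5.0 (compatible; MyScraper/1.0)' },
    });

    // Trim very large pages so the prompt stays within the model's context window.
    const html = resp.data.slice(0, 20000);

    const prompt = `
Extract the product title and the first price you find from the HTML below. Return in plain text.
${promptExtra}
HTML:
${html}
`;

    const { text } = await generateText({
      model: openai('gpt-4o'),
      prompt,
    });
    return text ?? '';
  } catch (error) {
    // In strict TypeScript the caught value is `unknown`, so narrow before reading .message.
    console.error('Error:', error instanceof Error ? error.message : error);
    return 'Failed to scrape or generate.';
  }
}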

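To try it, add a call such as scrapeThenAsk(process.argv[2]).then(console.log) at the bottom of the file, run it with a TypeScript runner (e.g., npx tsx server/scrapeAndAsk.ts <url>), and verify the output in the console.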

Building on the beginner example, add type-safe outputs and real-time UX.

Use generateObject for reliable fields (e.g., title, price).

Create server/extractProduct.ts:

typescript

import axios from 'axios';
import { generateObject } from 'ai';
import { openai } from '@ai-sdk/openai';
import { z } from 'zod';
// Assumes createProxyAgent from the quickstart has been moved to server/utils.ts and exported.
import { createProxyAgent } from './utils';

const ProductSchema = z.object({
  title: z.string(),
  price: z.string(),
  inStock: z.boolean(),
  currency: z.string().optional(),
});

export async function extractProductData(url: string) {
  const agent = createProxyAgent();
  try {
    const r = await axios.get<string>(url, {
      httpsAgent: agent,
      proxy: false, // route through the agent only
      responseType: 'text', // keep the body as a string
      timeout: 20000,
    });
    const html = r.data.slice(0, 20000); // trim very large pages

    const { object } = await generateObject({
      model: openai('gpt-4o'),
      schema: ProductSchema,
      prompt: `Extract title, price, inStock (true/false), and currency from the HTML below. Return JSON only.
HTML: ${html}`,
    });
    return object; // typed as z.infer<typeof ProductSchema>
  } catch (error) {
    console.error('Error:', error instanceof Error ? error.message : error);
    return null;
  }
}

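Use streamText for conversational apps. Here's a server-side stream with client integration (e.g., in Next.js). Note that AI SDK 5 changed the chat API: the route receives UI messages and returns a UI message stream, and useChat no longer manages the input state for you.

Server (app/api/stream/route.ts):

typescript

import { streamText, convertToModelMessages, type UIMessage } from 'ai';
import { openai } from '@ai-sdk/openai';

export async function POST(req: Request) {
  // useChat posts the full conversation as UI messages.
  const { messages }: { messages: UIMessage[] } = await req.json();

  const result = streamText({
    model: openai('gpt-4o'),
    messages: convertToModelMessages(messages),
  });

  // useChat expects the UI message stream format, not a plain text stream.
  return result.toUIMessageStreamResponse();
}

Client-side (React with the useChat hook; in AI SDK 5 it lives in @ai-sdk/react, so run pnpm i @ai-sdk/react):

tsx

'use client';

import { useState } from 'react';
import { useChat } from '@ai-sdk/react';
import { DefaultChatTransport } from 'ai';

export default function Chat() {
  const [input, setInput] = useState('');
  const { messages, sendMessage } = useChat({
    transport: new DefaultChatTransport({ api: '/api/stream' }),
  });

  return (
    <div>
      {messages.map(m => (
        <div key={m.id}>
          {m.role}:{' '}
          {m.parts.map((part, i) =>
            part.type === 'text' ? <span key={i}>{part.text}</span> : null,
          )}
        </div>
      ))}
      <form
        onSubmit={e => {
          e.preventDefault();
          sendMessage({ text: input });
          setInput('');
        }}
      >
        <input value={input} onChange={e => setInput(e.target.value)} />
        <button type="submit">Send</button>
      </form>
    </div>
  );
}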

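Tip: Use streamObject when the UI needs progressively filled structures. Partial objects are not validated until the stream completes, so validate the final object. If streaming is slow, shorten the schema or stream plain text first.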

Next, we'll scale these with agents (e.g., chaining, orchestration). Use AI SDK's tool calling for agentic loops.

Orchestrator → Worker: The orchestrator assigns tasks (e.g., which URLs to scrape); workers perform the actual scrapes through proxies, each isolated in its own proxy sub-pool.

Chunking & Pagination: Split large scrapes into chunks and distribute them across rotating proxies.

Sticky Sessions for Stateful Flows: Use sticky proxies for logins, and set stopWhen (e.g., stepCountIs(5)) on generateText/streamText to bound tool-calling loops.
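The orchestrator/worker and pagination patterns above can be sketched without any SDK. This is a minimal illustration, not a production scheduler: `runPool` and `pageUrls` are hypothetical names, the `page` query parameter is an assumption about the target site, and the worker here is a stub where a real one would fetch through a proxy.

```typescript
// Orchestrator: run `worker` over `tasks` with at most `limit` in flight.
// Results keep the input order; the first worker error rejects the whole run.
async function runPool<T, R>(
  tasks: T[],
  limit: number,
  worker: (task: T, index: number) => Promise<R>,
): Promise<R[]> {
  const results: R[] = new Array(tasks.length);
  let cursor = 0;

  // Each lane pulls the next unclaimed task until none remain.
  async function lane(): Promise<void> {
    while (cursor < tasks.length) {
      const index = cursor++;
      results[index] = await worker(tasks[index], index);
    }
  }

  const laneCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}

// Chunking: turn one paginated listing into one task per page.
function pageUrls(base: string, pages: number): string[] {
  return Array.from({ length: pages }, (_, i) => `${base}?page=${i + 1}`);
}

// Usage: scrape 5 pages, 2 at a time (stub worker standing in for a proxied fetch).
const scraped = runPool(pageUrls('https://example.com/list', 5), 2, async url => {
  return { url, fetchedAt: Date.now() };
});
```

Capping concurrency per pool is what keeps a burst of agent tool calls from looking like a flood to the target site.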

Rotating pool for high-volume scraping.

Sticky residential for sessions (e.g., logged-in pages).

Monitor the success rate per pool, and rotate away from IPs or regions whose rate drops.

Implement exponential backoff for retrying:

typescript

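import axios from 'axios';
import { createProxyAgent } from './utils'; // assumes the quickstart helper lives here

// Retry a proxied GET with exponential backoff: 1s, 2s, 4s, ...
async function retryFetch(url: string, maxRetries = 3): Promise<string> {
  for (let i = 0; i < maxRetries; i++) {
    try {
      const { data } = await axios.get<string>(url, {
        httpsAgent: createProxyAgent(),
        proxy: false,
        responseType: 'text',
        timeout: 20000,
      });
      return data;
    } catch (error) {
      if (i === maxRetries - 1) throw error; // out of attempts: surface the last error
      await new Promise(res => setTimeout(res, 2 ** i * 1000));
    }
  }
  throw new Error('retryFetch: maxRetries must be at least 1');
}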

Track the CAPTCHA/403 rate per pool and switch pools if it exceeds 10%. Use circuit breakers (e.g., via the circuit-breaker-js library) to stop sending traffic to a failing pool.
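One way to apply the 10% rule is a sliding window of recent outcomes per pool. The sketch below is an assumption-laden illustration: `PoolHealth` and `classify` are hypothetical names, the window size and minimum sample count are arbitrary defaults, and matching "captcha" in the body is a crude heuristic that real sites may defeat.

```typescript
type Outcome = 'ok' | 'blocked' | 'error';

// Crude classifier: treats 403/429 and CAPTCHA pages as blocks.
function classify(status: number, body: string): Outcome {
  if (status === 403 || status === 429 || /captcha/i.test(body)) return 'blocked';
  return status >= 200 && status < 300 ? 'ok' : 'error';
}

// Sliding window over the most recent outcomes for one proxy pool.
class PoolHealth {
  private readonly outcomes: Outcome[] = [];
  private readonly windowSize: number;
  private readonly maxBlockRate: number;

  constructor(windowSize = 100, maxBlockRate = 0.1) {
    this.windowSize = windowSize;
    this.maxBlockRate = maxBlockRate;
  }

  record(outcome: Outcome): void {
    this.outcomes.push(outcome);
    if (this.outcomes.length > this.windowSize) this.outcomes.shift();
  }

  blockRate(): number {
    if (this.outcomes.length === 0) return 0;
    const blocked = this.outcomes.filter(o => o === 'blocked').length;
    return blocked / this.outcomes.length;
  }

  // Require a minimum sample so one early CAPTCHA does not trip the switch.
  shouldSwitchPool(minSamples = 20): boolean {
    return this.outcomes.length >= minSamples && this.blockRate() > this.maxBlockRate;
  }
}
```

After each response you would call `health.record(classify(status, body))` and move traffic to another pool once `shouldSwitchPool()` returns true.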

Checklist:

Log in to GoProxy, go to your dashboard, and get your API credentials. (Sign up and get your free trial: 100MB residential for 7 days.)

Choose a pool based on your use case: Rotating residential (public pages), Sticky/residential (sessions), Mobile (fingerprint needs).

Env vars example (add to .env):

GOPROXY_POOL=rotating-residential

GOPROXY_REGION=us # Geo-target

Using in Node: See quickstart for axios/fetch. For Puppeteer:

typescript

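import puppeteer from 'puppeteer';

// Chromium ignores credentials embedded in --proxy-server, so pass only host:port here.
const browser = await puppeteer.launch({
  args: [`--proxy-server=http://${process.env.GOPROXY_HOST}:${process.env.GOPROXY_PORT}`],
});
const page = await browser.newPage();
// Answer the proxy's auth challenge with the GoProxy credentials.
await page.authenticate({ username: process.env.GOPROXY_USER ?? '', password: process.env.GOPROXY_PASS ?? '' });
await page.goto('https://example.com');
await browser.close(); // release the browser process when done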

Warm-up: Request homepages first. Randomize headers/throttle.

Monitoring: Collect requests/min, success rate (2xx), CAPTCHA freq, latency, LLM token cost.
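The warm-up item in the checklist (homepage first, randomized headers, throttling) might look like the sketch below. Everything here is illustrative: the user-agent strings are sample values that will age, the 50% jitter ratio is an arbitrary default, and the helper names are hypothetical.

```typescript
// Sample desktop user agents; refresh these periodically in real use.
const USER_AGENTS: string[] = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15',
  'Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0',
];

// `random` is injectable so the behavior can be tested deterministically.
function pickUserAgent(random: () => number = Math.random): string {
  return USER_AGENTS[Math.floor(random() * USER_AGENTS.length)];
}

// Throttle: a delay drawn uniformly from base ± (base * jitterRatio).
function jitteredDelayMs(
  baseMs: number,
  jitterRatio = 0.5,
  random: () => number = Math.random,
): number {
  const span = baseMs * jitterRatio;
  return Math.round(baseMs - span + random() * 2 * span);
}

// Warm-up: visit the site's homepage before the deep link.
function warmUpOrder(targetUrl: string): string[] {
  const home = `${new URL(targetUrl).origin}/`;
  return home === targetUrl ? [targetUrl] : [home, targetUrl];
}
```

A scraper would walk `warmUpOrder(url)`, sending `pickUserAgent()` as the `User-Agent` header and sleeping `jitteredDelayMs(1000)` between requests.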

Problem 1: generateObject returns invalid JSON or fails validation.

Fixes: Simplify schema (1 field first). Pre-clean HTML with Cheerio. Use streamText, then parse/validate server-side.
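The "use streamText, then parse/validate server-side" fallback can be sketched as follows. This is a dependency-free illustration with hand-written checks that mirror the ProductSchema fields from earlier; in a real project you would more likely pass the parsed value to `ProductSchema.safeParse`. The brace-matching in `extractJsonBlock` is a rough heuristic and assumes the reply contains a single JSON object.

```typescript
interface Product {
  title: string;
  price: string;
  inStock: boolean;
  currency?: string;
}

// Models often wrap JSON in prose or code fences; keep only the outermost {...}.
function extractJsonBlock(text: string): string | null {
  const start = text.indexOf('{');
  const end = text.lastIndexOf('}');
  return start !== -1 && end > start ? text.slice(start, end + 1) : null;
}

// Returns a validated Product, or null if the text is not usable.
function parseProduct(text: string): Product | null {
  const raw = extractJsonBlock(text);
  if (raw === null) return null;

  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch {
    return null; // malformed JSON
  }
  if (typeof value !== 'object' || value === null || Array.isArray(value)) return null;

  const { title, price, inStock, currency } = value as Record<string, unknown>;
  if (typeof title !== 'string' || typeof price !== 'string' || typeof inStock !== 'boolean') {
    return null; // a required field is missing or has the wrong type
  }
  if (typeof currency === 'string') return { title, price, inStock, currency };
  return currency === undefined ? { title, price, inStock } : null;
}
```

Returning null instead of throwing lets the caller decide whether to retry with a simpler schema or a cleaner HTML excerpt.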

Problem 2: Streaming is slow/blocks on validation.

Fixes: Stream plain text to UI; validate offline. Shorten schema or accept partials.

Problem 3: High CAPTCHA/403 from target site.

Fixes: Switch to sticky residential. Reduce concurrency; add delays. Rotate user agents.

Problem 4: Too many LLM tokens / cost explosion.

Fixes: Cache HTML/JSON. Pre-filter content. Use small models for parsing, large for synthesis.
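Two of these fixes, pre-filtering and caching, are easy to sketch. The regex-based `stripNoise` below is a rough approximation (a parser such as Cheerio is more robust), and `TtlCache` is a hypothetical in-memory helper; a multi-instance deployment would need a shared store instead.

```typescript
// Pre-filter: drop markup that costs tokens but carries no product data.
function stripNoise(html: string): string {
  return html
    .replace(/<(script|style|noscript|svg)\b[^>]*>[\s\S]*?<\/\1>/gi, ' ')
    .replace(/<!--[\s\S]*?-->/g, ' ')
    .replace(/\s+/g, ' ')
    .trim();
}

// Cache: remember fetched HTML or extracted JSON for a limited time.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // `now` is injectable so expiry can be tested without waiting.
  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: evict and report a miss
      return undefined;
    }
    return entry.value;
  }
}
```

Keying the cache by URL and storing the output of `stripNoise` means a repeat request skips both the proxy fetch and the token cost of the raw page.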

Measure tradeoffs with these KPIs:

| Metric | How to Measure | Target Value |
| --- | --- | --- |
| Cost per Extraction | (Proxy + LLM costs) / Extractions | < $0.01 |
| End-to-End Latency | Time from request to response | < 5 s |
| Success Rate | Successful scrapes / Total scrapes | > 95% |
| Coverage | Geos/pages scraped successfully | 100% of target |
| False Extraction Rate | Invalid fields / Total extractions | < 5% |
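The formulas in the table can be computed from a handful of counters. The sketch below assumes you already log these values per run; the `RunStats` shape is hypothetical, and reporting latency as a nearest-rank 95th percentile is my choice here, since the table does not say which statistic the 5-second target applies to.

```typescript
interface RunStats {
  proxyCostUsd: number;
  llmCostUsd: number;
  attempts: number; // total scrapes attempted
  successes: number; // scrapes that produced an extraction
  invalidExtractions: number; // extractions that failed validation
  latenciesMs: number[]; // end-to-end latency per request
}

interface Kpis {
  costPerExtractionUsd: number;
  successRate: number;
  falseExtractionRate: number;
  p95LatencyMs: number;
}

// Nearest-rank percentile; returns NaN for an empty sample.
function percentile(values: number[], p: number): number {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil(p * sorted.length));
  return sorted[rank - 1];
}

function computeKpis(s: RunStats): Kpis {
  return {
    costPerExtractionUsd:
      s.successes > 0 ? (s.proxyCostUsd + s.llmCostUsd) / s.successes : Infinity,
    successRate: s.attempts > 0 ? s.successes / s.attempts : 0,
    falseExtractionRate: s.successes > 0 ? s.invalidExtractions / s.successes : 0,
    p95LatencyMs: percentile(s.latenciesMs, 0.95),
  };
}
```

For example, a run that spends $1.50 on proxies and $2.50 on LLM calls for 960 successful extractions out of 1,000 attempts costs about $0.0042 per extraction with a 96% success rate, which meets both targets.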

Respect robots.txt (use robots-parser npm package to check dynamically) and site Terms. Scraping may be forbidden; assess legal risk.

Don’t store PII without consent; comply with GDPR/CCPA.

Secure keys & API credentials: Never commit .env; use managers.

Rate limit to avoid abuse. Proxies aid anonymity but don't legalize prohibited actions.

This guide provides a conceptual frame plus actionable code to get from idea to prototype fast. Experiment with GoProxy's trial, deploy on Vercel, and measure KPIs. For advanced topics, explore the AI SDK's full documentation.
